Power Method Recap

Description of the Method

In class, we analyzed the power method for computing the top right singular vector, , of a matrix with singular value decomposition . The method when as followed:

Power Method:

Choose randomly. E.g. .

For

Return

In other words, each of our iterates is simply a scaling of a column in the following matrix:

where . Typically we run iterations, so is a tall, narrow matrix. The span of 's columns is called a Krylov subspace because it is generated by starting with a single vector and repeatedly multiplying by a fixed matrix . We will return to shortly.

Analysis Preliminaries

Let be the right singular vectors of (the columns of ). We write each iterate in terms of this basis of vectors:

Note that . Intuitively, we expect the relative size of to increase in comparison to the other coefficients as increases, which is good because then will start looking more and more like . Why do we expect this? Because and thus we can check that . The term is fixed for all , but the term will be largest for , and thus the will increase in size more than any other term. We will analyze this formally soon.

First however, let's see what it suffices to prove.

Claim 1: If for all than either or .

Proof. Since , the above would imply that . Since it follows that and thus . Then for any unit vector we have . Since we conclude that either or . Suppose without loss of generality it's the first: . Then we would conclude that .

So, to show power methods converges, our goal is to prove that for all .

Heart of the Analysis

To prove this bound, we note that for any and where is some fixed scaling. So:

As claimed in class (and can be prove as an exercise -- try using rotational invariance of the Gaussain ) if is a randomly initialized start vector, with high proability. That may see like a lot, but is going to be tiny number, so will easily cancel that out. In particular,

where is our spectral gap parameter. As long as we set

then , and thus

as desired.

Alternative Guarantee

In machine learning applications, we care less about actually approximating , and more that the is a "good top singular vector" in that it offers a good rank-1 approximation to . I.e., we want to prove that is small, or equivalently, that is large. The largest it could be is . And from the same argument above, where we claimed that either or , it is not hard to check that , so after we get a near optimal low-rank approximation.

The Lanczos Method

We will now see how to improve on power method using what is known as the Lanczos method. Like power method, Lanczos is considered a Krylov subspace method because it will return a solution in the span of the Krylov subspace that we introduced before. Power method clearly does this -- it returns a scaling of the last column of . The whole idea behind Lanczos is to avoid "throwing away'" information from earlier columns like power method, but instead to take advantage of the whole space. It turns at that doing so can be very helpful -- we will get a bound that depends on instead of .

Specifically, to definte the Lanczos method, we will let be a matrix with orthonormal columns that spans . In practice, you need to be careful about how this is computed for numerical stability reasons, but we won't worry about that for now. Imagine your computer has infinite precision and we just compute by orthonormalizing .

Lanczos Method

- Compute an orthonormal span for the degree Krylov subspace.

- Let be the top eigenvector of

- Return .

Importantly, the first step only requires matrix-vector multiplications with , each of which can be implemented in time, just like we did for power method. The second step might look a bit circular at first glance. We want an approximation algorithm for computing the top eigenvector of and the above method uses a top eigenvector algorithm as a subroutine. But note that only has size , where is our iteration count. So even if it's too expensive to compute a direct eigendecomposition of , can be computed in time (the first part is the time to construct and , the second to compute , and the third to find its top eigenvector using a direct method).

Analysis Preliminaries

Our first claim is that Lanczos returns the best approximate singular vector in the span of the Krylov subspace. Then we will argue that there always exists some vector in the span of the subspace that is significantly better than what power method returns, so the Lanczos solution must be significantly better as well.

Claim 2: Amongst all vectors in the span of the Krylov subspace (i.e., any vector that can be written as for some ), minimizes the low-rank approximation error .

Proof. First, we can prove that should always be chosen to have unit norm, so that is a projection. Accordingly, it must also be that has unit norm. Then, proving the claim above is equivalent to proving that maximizes . It is not hard to check that setting to be the maximum right singular vector of maximizes this amongst all unit vectors, and this right singular vector is the same at the top eigenvector of .

Claim 3: I f we run the Lanczos method for There is some vector of the form such that either either or .

In other words, there is some in the Krylov subspace that has a large inner product with the true top singular vector . As seen earlier for power method, this can be used to prove that e.g. is large, and from Claim 2, we know that the returned by Lanczos can only do better. So, we focus on proving Claim 3.

Heart of the Analysis

The key idea is to observe that any vector in the span of the Krylov subspace of size -- i.e. any vector that can be written is equal to:

for some degree q polynomial . For example, we might have or . And moreover, for any degree polynomial , there is some such that . To see that this is true, we note that all of the monomials lie in the span of , so any linear combination does as well.

This means that showing there is a good approximate top singular vector in the span of the Krylov subspace can be reduced to finding a good polynomial . Specifically, notice that, if we write in the span of the singular vectors we have:

where

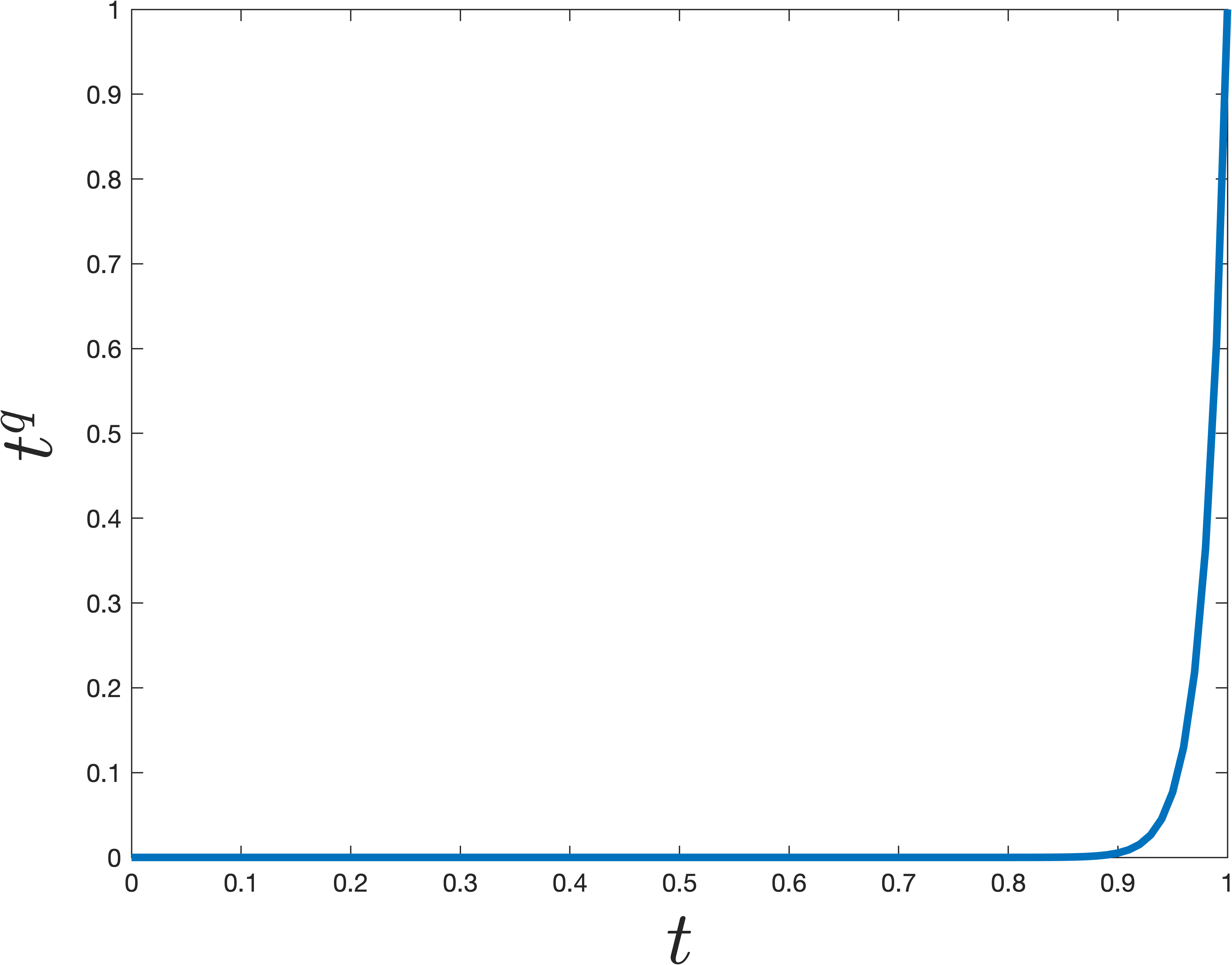

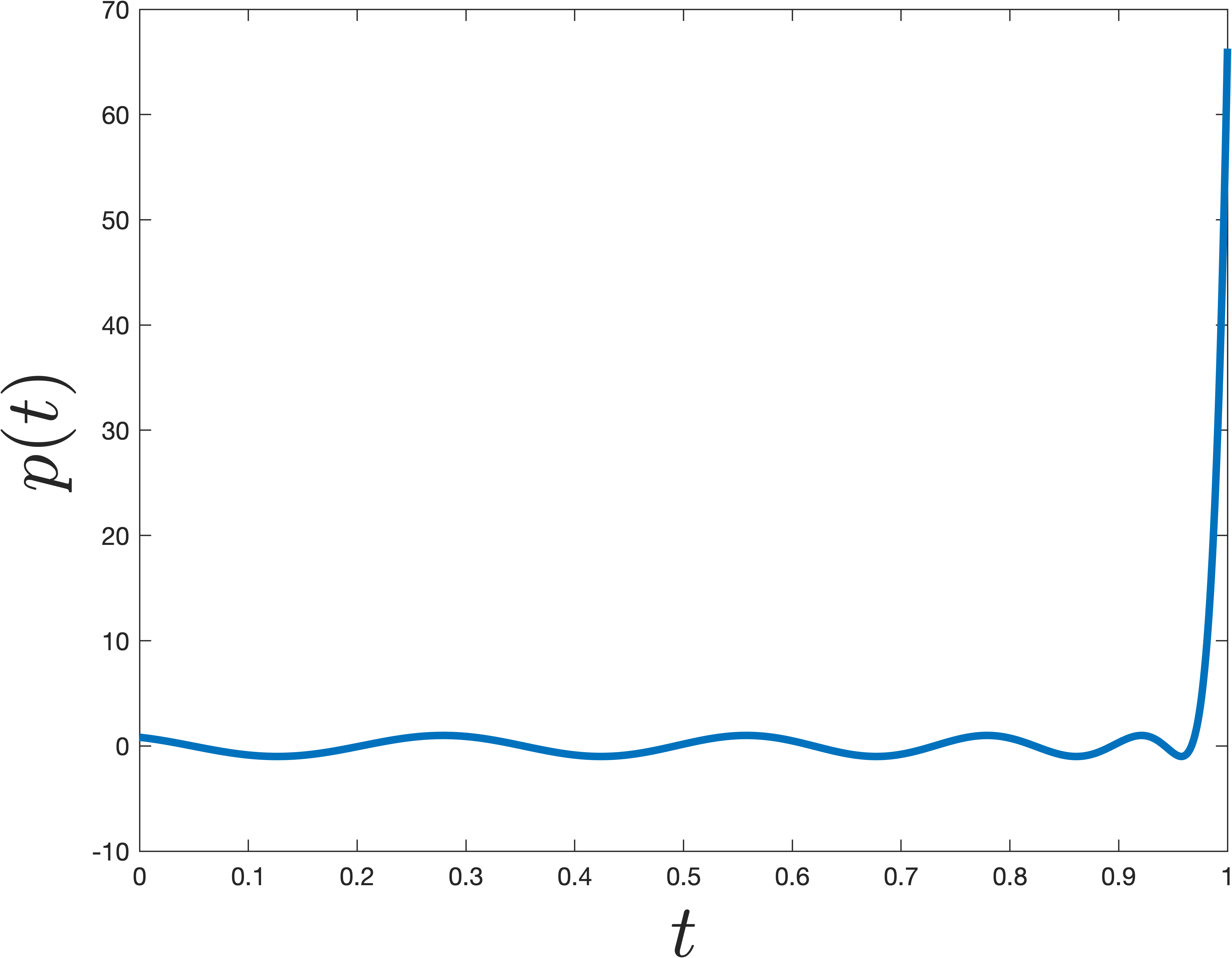

Our goal, which is exactly the same as when we analyzed power method, is to show that is much larger than for all . In other words we want to find a polynomial such that is small for all values of , but then the polynomial should suddenly jump to be large at . The simplest degree polynomial that does this is . However, it turns out there are more sharply jumping polynomials, which can be obtained by shifting/scaling a type of polynomials known as a Chebyshev polynomial. An example that jumps at is shown below. The difference from is pretty remarkable -- even though the polynomial shown below is nearly as small for all , it is much larger at .

Concretely we can claim the following, which is a bit tricky to prove, but well known (see Lemma 5 here for a full proof).

Claim 4: There is a degree degree polynomial such that and for .

In contrast, to ensure that satisfies the above, we would need degree q = -- a quadratically worse bound. This is what will account for the quadratic difference in performance between the Lanczos and Power Methods.

The fact that there exist much steeper low-degree polynomails than is the sort of thing studied in the mathematical field known as Approximation Theory. That might see pretty obscure, but steep polynomials are surprisingly useful in computer science, appearing everywhere from classic, to Quantum complexity theory, to learning theory. If you're interested in learning more about this, check out slides for this talk.

Finishing Up

Finally, we use Claim 4 to finish the analysis of Lanczos. Consider the vector , which as argued above lies in the Krylov subspace. As discussed below equation (7), our job is to prove that:

for all , as long as is chosen to have degree

Consider the numerator first. .

Accordingly, if we set where , then . And the denomenator is simply equal to . So, since with high probability, as argued earlier, (9) holds. And thus Claim 3 is proven by setting .